Hit by the June 2019 Google Core update? Ranking factors that might help you recover…

Google recently rolled out two different algorithm updates that once again had every SEO agency on its toes. As if last year’s Medic update didn’t cause enough uproar, Google’s June 2019 Broad Core Algorithm update has stirred up a huge clamor in SEO communities across the globe.

The June 2019 Core Update is now live and rolling out to our various data centers over the coming days.

— Google SearchLiaison (@searchliaison) June 3, 2019

If you’ve been hit, here’s what John Mueller, Webmaster Trends Analyst at Google, suggests that you start doing.

Below is a full transcript of the video from 26:14 to 28:25

I’ve heard this a few times, I think it’s a bit tricky because we’re not focusing on something very specific, where we’d say, for example when we rolled out the Speed update, that was something where we could talk about specifically this is how we’re using mobile speed and this is how it affects your website, therefore you should focus on speed as well.

With a lot of the relevance updates, a lot of kind of quality updates, the core updates that we make, there’s no specific thing where we’d be able to say, “you did this, and you should have done that, and therefore we’re showing things differently.” Sometimes the web just evolves, sometimes what users expect evolves, and similarly, sometimes our algorithms and the way we try to determine relevance evolve as well.

And with that, like you mentioned you probably [have] seen the tweets from SearchLiaison, there’s often nothing explicit that you can do to [kind of] change that.

What we do have is an older blog post from Amit Singhal * which covers a lot of questions that you can ask yourself about the quality of your website, that’s something I’d always recommend going through, that’s something that I would also go through with people who are not associated with your website. So often you as the site owner, you have an intimate relationship with your website, [and] you know exactly that it’s perfect. But someone who’s not associated with your website might look at your website and compare it to other websites and say, “Well, I don’t know if I could really trust your website, because it looks outdated, or because I don’t know who these people are who are writing about things.”

All of these things play a small role, and it’s not so much that there’s any technical thing that you can change and you line up HTML or server settings. It’s more about the overall picture where users nowadays would look at and say, “Well, I don’t know if this is as relevant as it used to be because of these vague things that I might be thinking about.”

* Amit Singhal is a former Google executive who was part of the Search Team for 15 years. We reviewed his blog post and took a deep dive into the cases of websites that fell short after the recent update, to understand the common reasons why they failed and how other webmasters can recover and learn from them. These and more are discussed below.

Your website’s relevance to search relies on the whole user experience, which breaks down into (1) the quality of the content you’re showing, (2) the E.A.T (expertise, authority, and trustworthiness) of each individual article, and (3) your website’s overall quality.

In the interview, Mueller specifically mentioned reviewing Singhal’s blog post about how to improve the quality of your site. Reiterating that there’s no specific fix, he adds that familiarity with your own website can keep you from seeing areas that need improvement. Having a third party check the overall experience and quality of your site and its content is one way to find out whether your website is as high quality as you think it is.

Essentially, what this means is that webmasters must be able to see and adapt to the changes of user behavior when it comes to search because Google will always base their rankings on that. The call is to constantly optimize and improve where needed, depending on how users interact with your website and its content.


What was the June 2019 Broad Core Update all about?

Despite Mueller’s explanation, many people on WebmasterWorld are still crying foul over Google’s lack of transparency about what’s going on. Google SearchLiaison’s explanation also echoes the guidance they gave in a tweet in October last year.

Sometimes, we make broad changes to our core algorithm. We inform about those because the actionable advice is that there is nothing in particular to “fix,” and we don’t want content owners to mistakenly try to change things that aren’t issues…. https://t.co/ohdP8vDatr

— Google SearchLiaison (@searchliaison) October 11, 2018

And in the thread about a previous Core Algorithm Update, Danny Sullivan, Google’s Public Liaison of Search, also reiterated in a tweet that there’s nothing specific to fix after broad core updates.

“We do these several times per year” and “there’s nothing wrong with pages” are key here. We will refresh our systems, but there’s also nothing specific for you to be improving for that.

— Danny Sullivan (@dannysullivan) March 28, 2018

However, the speculation that Google targeted a specific set of ‘verticals’ or niches in this update does not hold up, since many prominent websites from various niches were hit. The variety of websites that experienced drastic traffic and ranking losses is also visible in several Google Search Console threads.

Among them is DailyMail.co.uk. As explained in an original post by Jesus Mendez, the site lost over 50% of its traffic 24 hours after the June Broad Core update.

Despite his protest, another member of the community replied to the thread, arguing that the news site should have expected to be hit. He mentioned that the site had too many adverts, “cheap, low-quality adult pictures,” and low-quality content, and that a page takes over 12 seconds to load in Lighthouse.

Perhaps the most controversial case is CCN (CryptoCoinsNews), which had to shut down its operations because it lost over 70% of its traffic overnight, resulting in a 90% decrease in ad revenue. This was announced in a blog post written by CCN founder Jonas Borchgrevink.

Many cryptocurrency webmasters and enthusiasts were astonished that a high-value news outlet with more than 50 employees shut down almost overnight because of a periodic Google algorithm update. Although CCN founder Borchgrevink thinks the site was personally targeted by the search engine giant, others disagree.

Based on the replies to Borchgrevink’s Google Search Console post, a few think this has to do with the website falling under the YMYL (Your Money or Your Life) category.

SEMrush says YMYL websites are those that give health, financial, safety, or happiness advice that can negatively impact a person’s life, income, or happiness if it is low quality. A lot of websites fall under this category, primarily websites that talk about cryptocurrency and health.

Silver Product Expert Nikolaj Antonov explained in Borchgrevink’s thread how YMYL and E.A.T (Expertise, Authority, and Trustworthiness) affected the website’s position.

Aside from the above-mentioned factors, other product experts also cited flaws in the website’s migration from the old domain name (cryptocoinsnews.com) to the new one (ccn.com) that could have affected its ranking. According to them, using 301 redirects to send pages that should return a 404 to the new domain’s home page confuses Google’s algorithm.
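To make the redirect issue concrete, here is a minimal sketch of how a migration could decide its responses. It is an illustration only, not a description of CCN’s actual setup: the function name `resolve_legacy_url` and the paths in `MOVED` are hypothetical. The idea is that a 301 is returned only when a page has a real equivalent on the new domain, and everything else gets an honest 404 instead of a redirect to the home page.

```python
from typing import Dict, Optional, Tuple

NEW_ORIGIN = "https://ccn.com"  # new domain named in the article

# Hypothetical mapping of old paths to their equivalents on the new domain.
MOVED: Dict[str, str] = {
    "/about-us/": "/about/",
    "/bitcoin-price-analysis/": "/bitcoin-price-analysis/",
}


def resolve_legacy_url(path: str, moved: Dict[str, str]) -> Tuple[int, Optional[str]]:
    """Decide how the old domain should answer a request for `path`."""
    target = moved.get(path)
    if target is not None:
        # The page still exists elsewhere: permanently redirect to its equivalent.
        return 301, NEW_ORIGIN + target
    # No equivalent page: report "not found" rather than redirecting to the home page.
    return 404, None


print(resolve_legacy_url("/about-us/", MOVED))
print(resolve_legacy_url("/deleted-article/", MOVED))
```

The one-to-one mapping is the design choice that matters here: each old URL either points to the page that replaced it or admits that the page is gone.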

Aside from that, factors such as low-quality content and being outperformed by competition are also being considered by other product experts as the reasons why CCN experienced a huge drop in their rankings.

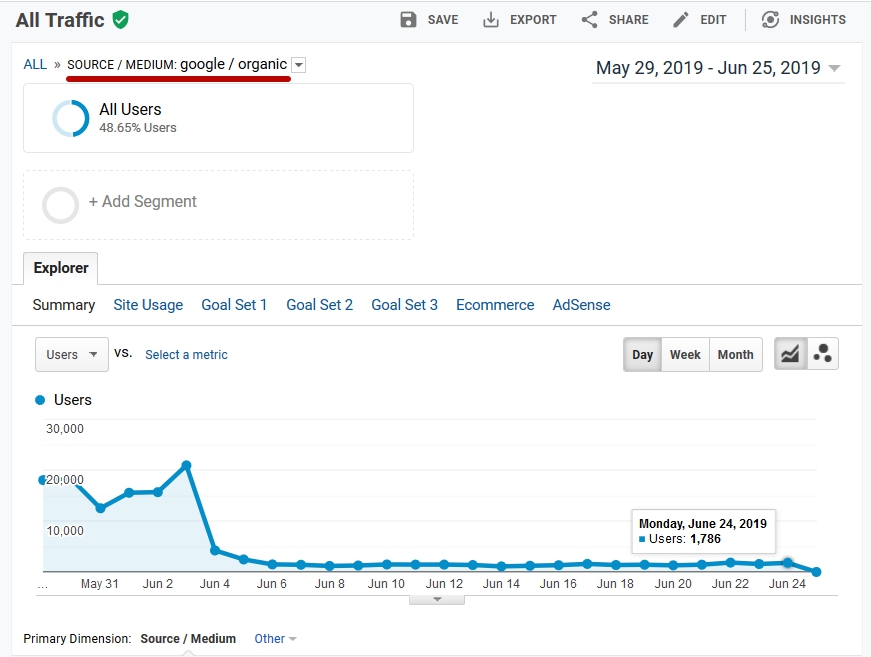

Interestingly, another cryptocurrency site, u.today, created its own thread about how badly it was affected by the update.

Despite the allegations, Google denies blacklisting cryptocurrency news sites. Cryptocurrency site Cointelegraph also clarified that they are still visible on Google and did not experience a significant drop or increase in their traffic like other websites in their industry.

Based on what we’ve seen in various Search Console threads, there is a host of reasons why these websites fell short. Among the most commonly mentioned factors are the following:

- Private Whois Information.

- No Contact page, no telephone number, or street address.

- Lack of real business information.

- No editorial oversight.

- Misuse of redirects.

- Use of black hat tactics like cloaking.

- Not using https.

- Lack of E.A.T.

- Slow page load time.

- Bad user experience (too many ads, pop-ups, clicks).

- Low-quality content.

- Improper internal linking.

- Not optimized for mobile users or lack of mobile responsiveness.

- Outdated content.

- Click-bait titles and irrelevant or zero-value content.

- Duplicated or spun content.

These factors clearly result in a bad user experience. If your content is not providing real value or if your website needs an on-page SEO overhaul, then you have to do it.

With all this said, the June 2019 Broad Core update didn’t focus on just one specific ranking factor.

What goes around comes around, as they say. If you or your previous SEO team used black or gray hat techniques and got away with it in the past, you may simply be experiencing the delayed consequences. And if you’ve been hit, sadly there’s no quick fix that will bounce you back to your previous position.

However, these movements in rankings are not permanent. You can still pull your rankings back up if you clean up your site, improve user experience, and, of course, continue to maintain a diverse and clean backlink profile.

In line with that, what exact steps can you take to recover? We revisited Google’s guidance on how to build a high-quality site, along with its webmaster guidelines, to find out.

Refine your website’s quality:

A checklist for a steady recovery

Google says they have over 200 ranking factors, but essentially, what they’re asking from webmasters is to have a high-quality website. But what exactly is a high-quality site? Do we really need to kiss the black hat or gray hat tactics goodbye?

Well, if you don’t want to feel anxious every time there’s a Google Core update, that’s one route you can take. Moreover, based on Amit Singhal’s article on what a high-quality site is about, there’s one sure takeaway: don’t be reactive; be proactive.

Don’t fixate on the Penguin, Panda, and Medic updates, because there are still other ranking factors that you can proactively improve to make your website a high-quality site as you go. Don’t wait for Google to announce another algorithm update before you work on improving your website; that’s just being reactive. Instead, proactively and intentionally improve your website for a better user experience.

To further understand how to make a high-quality site, below is a bulleted list of our analysis (and additional questions) on Singhal’s blog post as it relates to the web today.

- Content E.A.T (Expertise, Authority, and Trustworthiness).

Is the author a real person who has the expertise and in-depth knowledge to make the information presented in the content trustworthy enough?

- Content Originality.

– Does the article have original content?

– Is the article duplicated or spun content from other authority websites?

- Content Fairness.

– Does the article have an unbiased approach to the said topic?

– Does it show a tendency/intent to malign information in favor of only one party?

- Content Quality.

– Does the article have grammar or factual errors?

– Does the article provide real value to its readers? Or does it appear to be written in haste?

– Does the article provide helpful insights or specifics that value its audience’s time?

– Does it show information that is beyond obvious?

– Are the links within the article helpful and valuable?

– Is the article easy to read and to navigate?

- Website E.A.T.

– Is the website a known authority to discuss said topics or concepts?

– Do they regularly publish high-quality content to become an authority in the said area?

– Does the website have enough social authority to make its content worth sharing?

– If not, is their content high-quality enough to make it worth sharing?

– Do you believe the content is high-quality enough to be printed in recognized publications?

– Does the website have real business information, contact page, etc?

– Does your website have public Whois information?

– Does the website have a good domain authority/rank?

- Website Quality.

– Does the website produce duplicate content in an attempt to deceive the search engines?

– Is the internal linking within the content correct?

– Are these interlinked pages regularly updated?

– Are you redirecting your users to the correct pages they’re looking for?

– Do you customize your 404 pages to lead your audience to the content they’re looking for?

– Does the website have too much low-quality content?

– Does the page have so many ads that they distract readers?

- Website Speed.

– Is the website’s load time fast? Does it pass Google’s PageSpeed Insights standards?

– Are the images and media being used optimized for faster load time?

- Website Security.

– Is the website HTTPS?

– Would your users be confident enough to leave their credit card information on your site?

– As the website owner would you be confident to do that as well?

- Mobile responsiveness.

– Is the website optimized for mobile users?

– Are the texts readable on mobile?

– Does it load up fast for mobile users?

If you are able to answer the above-mentioned questions positively, you are highly likely to recover from your ranking and traffic loss over time. If your website is new, constantly aim to produce high-quality and valuable content, and Google will slowly pick up on your efforts.
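One way to work through the checklist above is to record the answers per category and see where the site is weakest. The sketch below is only an illustration: the category names come from the checklist, but the answers are made-up sample data and the helper names `pass_rate` and `rank_by_weakness` are hypothetical. Here `True` means the site passes that question, which for negatively worded questions (such as "does the article have factual errors?") means the honest answer is "no".

```python
from typing import Dict, List

# Sample audit of an imaginary site: one boolean per checklist question.
audit: Dict[str, List[bool]] = {
    "Content E.A.T": [True],
    "Content Originality": [True, True],
    "Website Speed": [False, True],
    "Website Security": [True, True, True],
    "Mobile responsiveness": [False, False, True],
}


def pass_rate(results: List[bool]) -> float:
    """Share of questions in a category that the site passes."""
    return sum(results) / len(results) if results else 0.0


def rank_by_weakness(answers: Dict[str, List[bool]]) -> List[str]:
    """Return category names ordered from lowest pass rate to highest."""
    return sorted(answers, key=lambda category: pass_rate(answers[category]))


for category in rank_by_weakness(audit):
    print(f"{category}: {pass_rate(audit[category]):.0%} passed")
```

A tally like this does not measure quality by itself; it only helps decide which part of the checklist to tackle first.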

Remember, one high-ranking article is not enough. Once you have enough traffic to get attention on your other content, you will be able to continually grow your website’s audience. Publish and update your content regularly to keep the velocity of your traffic’s organic growth.

While you still have the momentum, make good use of it.

Clean up your act:

Following Google Webmaster guidelines

Aside from low-quality sites, Google is also not fond of cheaters. If you want to stay in the game, play by their rules. If you want to avoid any possible consequences on the upcoming algorithm rollouts, here is the list of Google webmaster guidelines that will lead you to the right path:

1. No link schemes. Your link profile is as important as your content. If Google finds out you have links coming from illegitimate sources, you will most likely say goodbye to search results forever. Whether they’re public or private, PBNs are only good for short-term gain.

2. Don’t scrape content. Don’t plagiarize other websites’ content and claim it as your own.

3. Don’t spin content. Stop rewriting existing articles. Instead, find a way to stand out and add more value than the current high-ranking articles.

4. No cloaking. Are your readers seeing the exact search result they clicked on, or are you redirecting them to different, unrelated content in order to cheat your way up the rankings?

5. No misuse of redirects. Don’t confuse the readers and don’t confuse the search engine. Doing this will wreak havoc on your rankings. Don’t 301 redirect a 404 page to your homepage; Google can spot that trick now.

6. Prevent user-generated spam. If you have a comments section in your blog or website, always monitor and remove spammy links.

7. Constant monitoring and removal of hackers. Maintain a robust and secure system or server and continuously aim to make your website safer.

8. No pages with viruses or phishing software. Don’t put malware or viruses on your website.

9. Stop using doorway pages. Always ensure each page on your domain gives value to your users.
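Guideline 6 above, monitoring comments for spammy links, is one of the few items on this list that can be partly automated. The following is a rough sketch under stated assumptions, not a complete spam filter: the function name `external_links` and the example domains are hypothetical, and the simple URL pattern will miss obfuscated links. It pulls out links that point away from your own domain so that a moderator can review the comment before it goes live.

```python
import re
from typing import List
from urllib.parse import urlparse

# Deliberately simple pattern: anything that starts with http:// or https://.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+", re.IGNORECASE)


def external_links(comment: str, own_host: str) -> List[str]:
    """Return the links in `comment` that point outside `own_host`."""
    found = []
    for url in URL_PATTERN.findall(comment):
        host = (urlparse(url).hostname or "").lower()
        # Links to the site itself or to its subdomains are not flagged.
        if host != own_host and not host.endswith("." + own_host):
            found.append(url)
    return found


comment = "Great post! Visit http://spam.example/buy for cheap deals"
flagged = external_links(comment, "myblog.example")
if flagged:
    print("Hold for moderation:", flagged)
```

Flagging for human review, rather than deleting automatically, keeps legitimate comments that happen to cite a source from being lost.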

Conclusion

Google is getting smarter by the day. Don’t rely on quick fixes or algorithm tweaks because sooner or later, Google will find out about them. Google will experience hiccups every now and then, but improving the quality of the user experience has always been its priority.

Although Google is still a business at the end of the day, we all want an internet where our need for helpful and reliable information is easily satisfied. We can’t go back to the dark era of the web, when webmasters thought only of their own gain and our valuable time went down the drain.

We’ve seen how Yahoo, AOL, and the rest of the search engine giants in the past failed because they didn’t prioritize user experience.

After all, don’t we all want a fair internet where the right information is easy to find?

We hope we were able to shed light on your questions especially on how you can recover from the Google updates not just in 2019, but also for years to come.

Do you have any ideas to share? Did you also get hit by the latest algorithm update? What were your corrective actions? Share them with us in the comments section and we’ll try to engage with you as much as possible!